Gameplay Filters

Robust Zero-Shot Safety through Adversarial Imagination

The gameplay filter is a new class of predictive safety filters, offering a general approach for runtime robot safety based on game-theoretic reinforcement learning and the core principles of safety filters. Our method learns a best-effort safety policy and a worst-case sim-to-real gap in simulation, and then uses their interplay to inform the robot’s real-time decisions on how and when to preempt potential safety violations.

1. Learn from adversity

Our approach first pre-trains a safety-centric control policy in simulation by pitting it against an adversarial environment agent that simultaneously learns to steer the robot towards catastrophic failures (we call this Iterative Soft Adversarial Actor-Critic for Safety, or ISAACS). This escalation produces not only a robust robot safety policy that is remarkably hard to exploit, but also an estimate of the worst-case sim-to-real gap that the robot might encounter after deployment. The algorithm updates a safety value network (critic) and keeps a leaderboard of the most effective player policies (actors).
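The leaderboard idea can be illustrated with a deliberately small toy. The sketch below is not ISAACS itself: random perturbation stands in for the actor-critic gradient updates, there is no critic network, and the 1-D dynamics, the gain parameterization, the bounds `U_MAX` and `D_MAX`, and every function name are assumptions made up for illustration. It only shows how a controller and an adversary might be ranked against each other's best checkpoints, with the top few kept on each side.

```python
import random

U_MAX, D_MAX = 1.0, 0.3  # hypothetical control and disturbance bounds


def clip(x, lim):
    return max(-lim, min(lim, x))


def game_margin(ctrl_gain, dstb_gain, steps=100, dt=0.05):
    """Play one simulated safety game and return the smallest margin 1 - |pos| seen.

    A negative value means the robot left the safe set |pos| < 1 (adversary wins).
    """
    pos, vel = 0.0, 0.5
    worst = 1.0 - abs(pos)
    for _ in range(steps):
        u = clip(-ctrl_gain * pos - vel, U_MAX)  # toy controller: linear feedback
        d = clip(dstb_gain * vel, D_MAX)         # toy adversary: pushes along velocity
        vel += (u + d) * dt
        pos += vel * dt
        worst = min(worst, 1.0 - abs(pos))
    return worst


def ctrl_score(ctrl_gain, dstb_board):
    # A controller is judged by its worst outcome against the adversary leaderboard.
    return min(game_margin(ctrl_gain, d) for d in dstb_board)


def dstb_score(dstb_gain, ctrl_board):
    # An adversary is judged by the margin it leaves even to the best controller.
    return max(game_margin(c, dstb_gain) for c in ctrl_board)


def train(rounds=30, top_k=3, seed=0):
    rng = random.Random(seed)
    ctrl_board, dstb_board = [0.1], [0.0]
    for _ in range(rounds):
        # Controller step: perturb the leader, re-rank (higher margin is better).
        ctrl_board.append(abs(ctrl_board[0] + rng.gauss(0.0, 0.5)))
        ctrl_board.sort(key=lambda c: ctrl_score(c, dstb_board), reverse=True)
        del ctrl_board[top_k:]
        # Adversary step: perturb the leader, re-rank (lower margin is better).
        dstb_board.append(dstb_board[0] + rng.gauss(0.0, 0.5))
        dstb_board.sort(key=lambda d: dstb_score(d, ctrl_board))
        del dstb_board[top_k:]
    # Final re-rank of the controllers against the final adversary leaderboard.
    ctrl_board.sort(key=lambda c: ctrl_score(c, dstb_board), reverse=True)
    return ctrl_board, dstb_board
```

Calling `train()` returns the two leaderboards, best entry first. In this toy, the first controller entry plays the role of the safety policy and the first adversary entry plays the role of the learned worst-case sim-to-real gap.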

2. Never lose a game

At runtime, the learned player strategies become part of a safety filter, which allows the robot to pursue its task-specific goals or learn a new policy as long as safety is not in jeopardy, but intervenes as needed to prevent future safety violations.

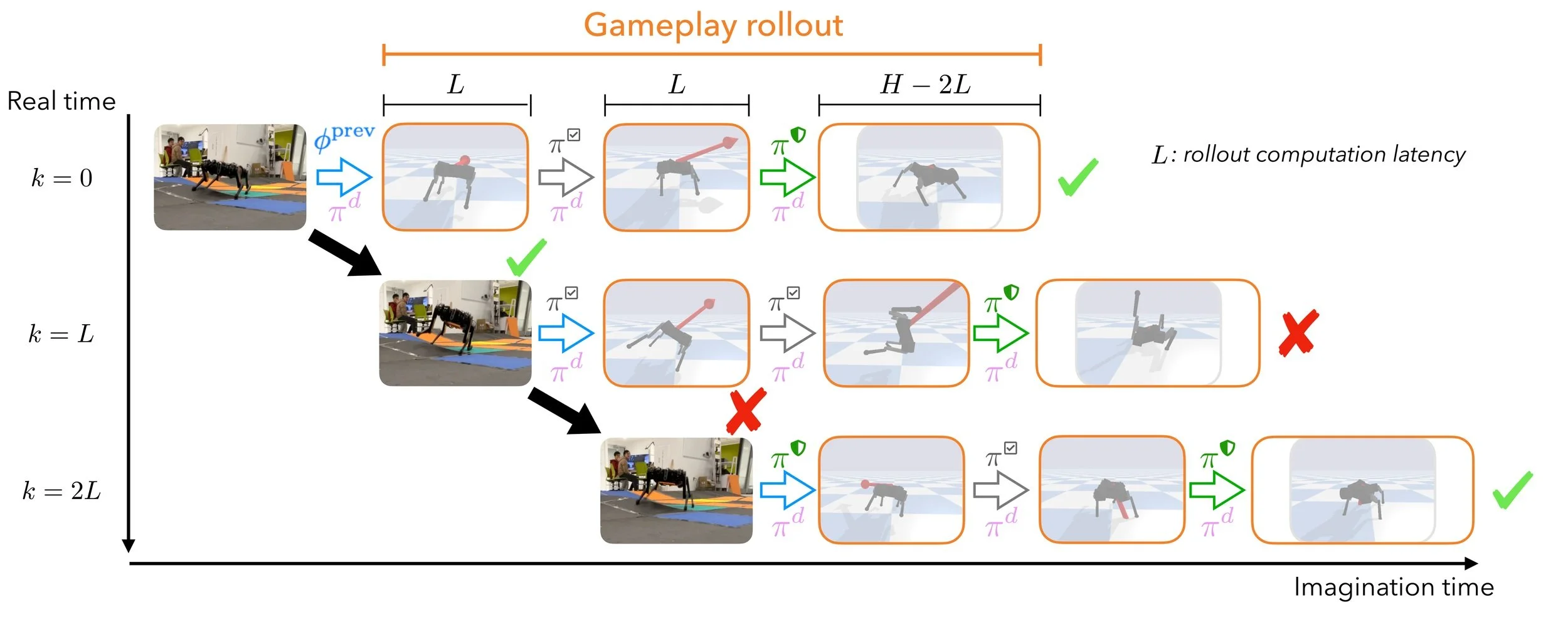

To decide when and how to intervene, the gameplay filter continually imagines (simulates) hypothetical games between the two learned agents after each candidate task action: if taking the proposed action would lead to the robot losing the safety game against the learned adversarial environment, the action is rejected and replaced by the action of the learned safety policy.
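The rollout check described above can be sketched on a toy system. Everything here is an assumption for illustration: the 1-D double-integrator dynamics, the wall at `WALL`, the constants `DT` and `D_MAX`, the fixed 30-step horizon, and the hand-written `safety_policy` and `adversary_policy` that stand in for the learned actors. Treating "no failure within the horizon" as winning the imagined game is also a simplification.

```python
DT = 0.1     # integration step (assumed)
D_MAX = 0.3  # bound on the modeled sim-to-real gap (assumed)
WALL = 1.0   # failure set: pos >= WALL (assumed)


def step(state, u, d):
    """Toy dynamics: control u and disturbance d both act as accelerations."""
    pos, vel = state
    vel = vel + (u + d) * DT
    pos = pos + vel * DT
    return (pos, vel)


def is_failure(state):
    return state[0] >= WALL


def safety_policy(state):
    return -1.0   # stand-in for the learned safety actor: accelerate away from the wall


def adversary_policy(state):
    return D_MAX  # stand-in for the learned adversary: push toward the wall


def task_policy(state):
    return 1.0    # task: drive toward the wall as fast as possible


def gameplay_filter(state, task_action, horizon=30):
    """Return (action, intervened) after imagining one safety game."""
    # First imagined step: the candidate task action against the adversary.
    sim = step(state, task_action, adversary_policy(state))
    if is_failure(sim):
        return safety_policy(state), True
    # Remaining steps: the safety policy plays against the adversary.
    for _ in range(horizon):
        sim = step(sim, safety_policy(sim), adversary_policy(sim))
        if is_failure(sim):
            return safety_policy(state), True
    return task_action, False


def run_episode(steps=200, use_filter=True):
    """Closed loop in which the 'real' disturbance equals the imagined worst case."""
    state, interventions = (0.0, 0.0), 0
    for _ in range(steps):
        action = task_policy(state)
        if use_filter:
            action, intervened = gameplay_filter(state, action)
            interventions += intervened
        state = step(state, action, D_MAX)
        if is_failure(state):
            return True, interventions
    return False, interventions
```

`run_episode(use_filter=True)` returns whether the robot hit the wall and how many times the filter overrode the task action. Running it with `use_filter=False` shows the unfiltered task policy driving straight into the failure set.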

Scalable

Because the filter is built from neural network policies, it is suitable for challenging robotic settings such as walking on abrupt terrain and under strong external forces.

General

A gameplay filter can be synthesized automatically for any robotic system. All you need is a (black-box) dynamics model.

Robust

The gameplay filter actively learns and explicitly predicts dangerous discrepancies between the modeled and real dynamics.

Citation

@inproceedings{nguyen2024gameplay,
  title={Gameplay Filters: Robust Zero-Shot Safety through Adversarial Imagination},
  author={Duy P. Nguyen* and Kai-Chieh Hsu* and Wenhao Yu and Jie Tan and Jaime Fern{\'a}ndez Fisac},
  booktitle={8th Annual Conference on Robot Learning},
  year={2024},
  url={https://openreview.net/forum?id=Ke5xrnBFAR}
}

Acknowledgments

This work has been supported in part by the Google Research Scholar Award and the DARPA LINC program. The authors thank Zixu Zhang for his help in preparing the Go2 robot for experiments.