Safe Occlusion-aware Autonomous Driving via Game-Theoretic Active Perception

Robotic systems often need to plan trajectories through partially occluded environments, where unknown objects beyond the robot’s field of view could potentially threaten safety. How can robots guarantee safety with respect to these “invisible threats” without becoming unable to complete their tasks?

Our objective in this project is to allow autonomous vehicles to efficiently plan and execute trajectories that never result in a safety failure, given an explicit set of assumptions on the environment (e.g. maximum speed of objects), while eliminating unnecessary conservativeness by actively planning for and exploiting future observations.

Our proposed game-theoretic active perception scheme keeps track of the set of possible states of a potential hidden object, and generates safe and efficient trajectory plans by accounting for the autonomous vehicle's future ability to (1) obtain new sensory information and (2) actively avoid collisions with any newly detected object.

How does it work?

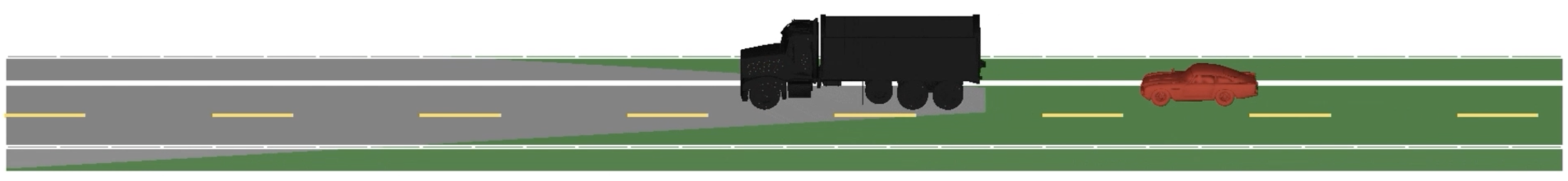

Imagine an autonomous car driving on a two-way road behind a slow truck. Is it safe for it to overtake?

Even if there are no objects in the autonomous car’s current field of view (which may combine multiple sensing modalities such as cameras and lidar), there could be an object approaching along the oncoming lane, currently occluded by the truck.

The autonomous car needs to reason about these potential yet-unseen objects. Based on its current and past observations, as well as a dynamical model describing how objects may move, it can compute the current forward hidden set, describing all positions and velocities that an object could have at the current time without having been detected. This set changes over time as new observations are made.

Evolution of the forward hidden set (blue) in x-y-v space (velocity is plotted on the vertical axis). Although oncoming vehicles move towards the autonomous car, the forward hidden set retracts in the opposite direction, “pushed” away by the autonomous vehicle’s field of view.

The forward hidden set allows reasoning about current possible locations of hidden objects based on the history of observations obtained by the autonomous car so far, but it can also be extrapolated forward along any candidate future trajectory. This allows the autonomous car to reason about what potential objects would remain undetected in different candidate futures, depending on what trajectory it chooses (e.g. remain behind the truck or slowly nudge sideways to “peek” down the road).
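To make the idea concrete, here is a minimal sketch of a forward hidden set on a one-dimensional road, rolled out along two candidate futures. It is a deliberate simplification and not the project's implementation: the set is tracked over position cells only (the text above also tracks velocity), objects are assumed to move toward lower cell indices by at most `max_cells_per_step` cells per step, and the function names and grid layout are hypothetical.

```python
def step_hidden_set(hidden, visible, max_cells_per_step):
    """Advance a 1-D hidden set by one time step (toy model, assumed here).

    hidden[i]  is True if an undetected object could occupy cell i now.
    visible[i] is True if cell i is inside the field of view after the step.
    Objects move toward lower indices by 0..max_cells_per_step cells per step.
    Objects may also enter from beyond the far end of the grid.
    """
    n = len(hidden)
    nxt = [False] * n
    for i in range(n):
        last = min(n - 1, i + max_cells_per_step)
        # Cell i can be reached from any hidden cell in i..last,
        # or from outside the grid if the reach extends past the last cell.
        reachable = any(hidden[i:last + 1]) or (i + max_cells_per_step >= n)
        # Anything inside the field of view would have been detected.
        nxt[i] = reachable and not visible[i]
    return nxt


def rollout_hidden_set(hidden, visible_per_step, max_cells_per_step):
    """Extrapolate the hidden set along a candidate future.

    visible_per_step[k] is the visibility mask the vehicle would have
    at step k if it followed that candidate trajectory.
    """
    history = []
    for visible in visible_per_step:
        hidden = step_hidden_set(hidden, visible, max_cells_per_step)
        history.append(hidden)
    return history


# Toy scenario: 10 cells of oncoming lane, cells 6..9 occluded by the truck.
N = 10
hidden0 = [i >= 6 for i in range(N)]

# Candidate A: stay behind the truck, so the view never improves.
stay = [[i < 6 for i in range(N)] for _ in range(3)]
# Candidate B: nudge sideways, revealing one more cell per step.
peek = [[i < 7 + k for i in range(N)] for k in range(3)]

hidden_stay = rollout_hidden_set(hidden0, stay, max_cells_per_step=2)[-1]
hidden_peek = rollout_hidden_set(hidden0, peek, max_cells_per_step=2)[-1]
```

In this toy setup the hidden set stays at cells 6 to 9 when the car remains behind the truck, and shrinks to the single farthest cell when it peeks, even though the hypothetical objects are moving toward the car.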

How useful is this? The autonomous car cannot prevent another vehicle, pedestrian, etc. from being in a particular state, but in some cases it will be able to prevent them from being hidden in that state (“you can be there, but I will know”). This seems like a promising start, but it doesn’t guarantee safety by itself. What if a dangerous object is detected too late to avoid a collision with it?

Fortunately, the problem of avoiding a collision with an observed dynamic object is well studied: no matter how the newly-discovered object behaves, we can guarantee safety as long as the autonomous vehicle can avoid capture in a pursuit-evasion game. This allows us to compute the danger zone around the autonomous vehicle, which is the set of initial conditions from which an object (even if observed) could force an inevitable collision.

Danger zone (orange) around the autonomous vehicle, in x-y-v space. The oncoming vehicle (blue) is outside the danger zone if its speed is low (lower part of the set), and the autonomous car has enough time to merge behind the truck. Conversely, at high speeds (upper part of the set), the oncoming vehicle is inside the danger zone and a collision is inevitable. If a previously hidden vehicle is detected inside the danger zone, it is no longer possible to ensure safety.
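The low-speed versus high-speed distinction can be illustrated with a crude closed-form stand-in for the danger zone. This is not the pursuit-evasion computation itself: it assumes a head-on, one-dimensional encounter in which the autonomous car is committed to a single escape maneuver that takes `t_clear` seconds to leave the oncoming lane, while the other vehicle accelerates as hard as allowed. All names and parameter values below are hypothetical.

```python
def worst_case_travel(v, a_max, v_max, t):
    """Distance covered in time t when accelerating at a_max from v, capped at v_max."""
    if a_max > 0:
        # Time spent accelerating before reaching the speed cap (or t runs out).
        t_acc = max(0.0, min(t, (v_max - v) / a_max))
    else:
        t_acc = 0.0
    v_end = v + a_max * t_acc
    return v * t_acc + 0.5 * a_max * t_acc ** 2 + v_end * (t - t_acc)


def in_danger_zone(gap, v_obj, v_ego, t_clear, a_max=2.0, v_max=20.0):
    """True if a head-on object can close the gap before the ego clears the lane.

    gap     : current distance between the two vehicles (m)
    v_obj   : current speed of the oncoming object (m/s)
    v_ego   : ego speed while it is still in the oncoming lane (m/s)
    t_clear : time the ego needs to leave the oncoming lane (s)
    a_max, v_max : assumed bounds on the object's acceleration and speed
    """
    closing = v_ego * t_clear + worst_case_travel(v_obj, a_max, v_max, t_clear)
    return gap <= closing


# Same gap, two object speeds: the slow one is outside, the fast one inside.
slow_is_dangerous = in_danger_zone(gap=80.0, v_obj=5.0, v_ego=10.0, t_clear=3.0)
fast_is_dangerous = in_danger_zone(gap=80.0, v_obj=20.0, v_ego=10.0, t_clear=3.0)
```

A full treatment would let the autonomous car choose its best evasive response rather than a fixed maneuver, which is what the pursuit-evasion formulation provides.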

This leads to the key theoretical—and, it turns out, also very practical—result in this work:

A candidate motion plan is safe with respect to potential occluded objects as long as the future forward hidden set never overlaps with the danger zone.
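This condition can be written compactly. The notation is introduced here for illustration only and is an assumption, not taken from the original text: let $\xi$ be a candidate motion plan over a horizon $[0, T]$, let $\mathcal{H}_\xi(t)$ be the forward hidden set at time $t$ if the vehicle follows $\xi$, and let $\mathcal{D}_\xi(t)$ be the danger zone around the vehicle at that time, both expressed in the same state space.

$$
\mathcal{H}_\xi(t) \cap \mathcal{D}_\xi(t) = \emptyset \quad \forall\, t \in [0, T]
\quad \Longrightarrow \quad \xi \text{ is safe with respect to occluded objects.}
$$

The implication runs one way: the condition is sufficient for safety, which matches the "as long as" wording above.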

This is an unsafe candidate trajectory because the forward hidden set and danger zone overlap at a future time: a potential hidden object could become impossible to evade before the autonomous vehicle can detect it.

This is a safe candidate trajectory because the forward hidden set and danger zone do not overlap at any future time: any potential hidden objects will be detected while they can still be avoided.

As long as our planned trajectories satisfy the above condition, there will always be a known safety fallback strategy that will preserve safety if and when a potentially dangerous object comes into the autonomous vehicle’s field of view.

This leads to a planning strategy that can be incorporated into an arbitrary trajectory planning algorithm by augmenting the traditional “collision check” routine to also reject overlaps between the forward hidden set and the danger zone.
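The augmented check could look roughly like the sketch below. It assumes that both sets are available as finite sets of grid cells at each time step, supplied by callables whose names and signatures are hypothetical, and it is meant as an illustration of where the extra test slots in rather than as the project's planner interface.

```python
from typing import Callable, Hashable, Sequence, Set

Cell = Hashable  # any hashable state label, e.g. a grid index


def plan_is_safe(
    trajectory: Sequence[Cell],
    occupied: Callable[[int], Set[Cell]],
    hidden_set: Callable[[int, Sequence[Cell]], Set[Cell]],
    danger_zone: Callable[[int, Cell], Set[Cell]],
) -> bool:
    """Reject a plan on a visible collision OR a hidden-set/danger-zone overlap."""
    for k, ego in enumerate(trajectory):
        # Traditional collision check against detected obstacles.
        if ego in occupied(k):
            return False
        # Added check: no possibly hidden object may already be unavoidable.
        # The hidden set depends on the path followed up to step k.
        if hidden_set(k, trajectory[: k + 1]) & danger_zone(k, ego):
            return False
    return True


# Toy 1-D example with integer cells (all values are made up).
def no_obstacles(k):
    return set()

def static_hidden(k, path_so_far):
    return {8, 9}  # cells that stay occluded whatever the ego does

def ahead_of_ego(k, ego):
    return {ego + 1, ego + 2, ego + 3}  # three cells ahead are unavoidable

cautious = [0, 1, 2, 3, 4]
aggressive = [0, 2, 4, 6]

cautious_ok = plan_is_safe(cautious, no_obstacles, static_hidden, ahead_of_ego)
aggressive_ok = plan_is_safe(aggressive, no_obstacles, static_hidden, ahead_of_ego)
```

Here the cautious plan passes, while the aggressive plan is rejected at its last step because the danger zone ahead of cell 6 reaches the occluded cells 8 and 9. Because the extra test has the same shape as a collision check, it can be dropped into a sampling-based or optimization-based planner without changing the rest of the search.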

The autonomous vehicle approaches the intersection assertively, after determining that no vehicle could emerge from behind the red truck on the right at a speed high enough to constitute a safety threat.

Representation of the intersection by the autonomous vehicle, with the danger zone separated into the yield (red) and go (blue) strategies. A vehicle has emerged from the forward hidden set (yellow) behind the truck, but—as guaranteed—is outside the danger zone.